When testing components that have asynchronous behavior, you need to use FlexUnit's addAsync() method to correctly handle the events that fire. Unless you tell FlexUnit that you are expecting an asynchronous event, it will assume that the test is done, with no errors, as soon as your test method finishes. This can lead to false positives and annoying popup error dialogs when an assert fails after the fact. Below are some examples of how to use addAsync().

I'll start off with what not to do so you can get an idea of why you need addAsync(). Let's write a simple test that verifies that the flash.utils.Timer class fires events the way we think it should. The first attempt might look like this:

    package com.example {
        import flexunit.framework.TestCase;
        import flash.utils.Timer;
        import flash.events.TimerEvent;

        public class TimerTest extends TestCase {
            private var _timerCount:int;

            override public function setUp():void {
                _timerCount = 0;
            }

            public function testTimer():void {
                var timer:Timer = new Timer(3000, 1);
                timer.addEventListener(TimerEvent.TIMER, incrementCount);
                timer.addEventListener(TimerEvent.TIMER_COMPLETE, verifyCount);
                timer.start();
            }

            private function incrementCount(timerEvent:TimerEvent):void {
                _timerCount++;
            }

            private function verifyCount(timerEvent:TimerEvent):void {
                assertEquals(1, _timerCount);
            }
        }
    }

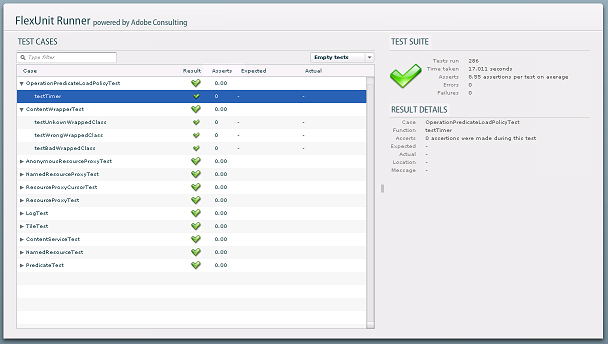

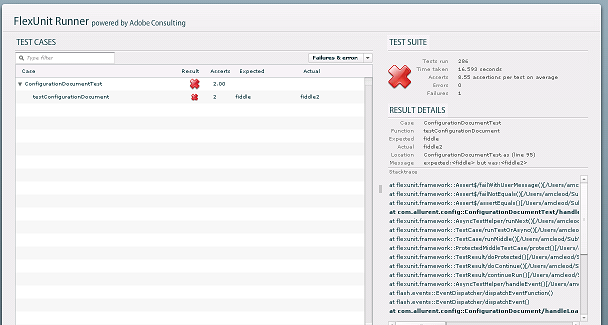

Nothing fancy here: we declare a single test method, create the Timer, and then start it. It should run once and then stop. If you add this test to your test suite and run it, you get a nice green bar. This is a false positive! The assertEquals() in the verifyCount() method didn't contribute to the test passing at all. Try changing it to:

    assertEquals(2, _timerCount);

When you rerun the test you'll get another green bar. Then a few seconds later an error dialog box pops up with the following message:

Error: expected:<2> but was:<1>

at flexunit.framework::Assert$/flexunit.framework:Assert::failWithUserMessage()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\Assert.as:209]

at flexunit.framework::Assert$/flexunit.framework:Assert::failNotEquals()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\Assert.as:62]

at flexunit.framework::Assert$/assertEquals()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\Assert.as:54]

at com.example::TimerTest/com.example:TimerTest::verifyCount()[C:\Work\Eclipse3.2\Fresh\AsyncTest\src\com\example\TimerTest.as:26]

at flash.events::EventDispatcher/flash.events:EventDispatcher::dispatchEventFunction()

at flash.events::EventDispatcher/dispatchEvent()

at flash.utils::Timer/flash.utils:Timer::tick()

But, but, the test bar was green! FlexUnit didn't know you were waiting for an event, so when the testTimer() method finished, the test was considered clean. The error dialog pops up because FlexUnit is no longer around to catch the error and turn it into a pretty message. This also shows that even though the test finished, our Timer was still running in the background. We can fix these issues by adding a tearDown() method and by using addAsync() to wrap the function that should be called, specifying the maximum time to wait for that function to be called. The function returned by addAsync() is used in place of your original function. In the example above, the listener passed to the second addEventListener() call is where addAsync() comes into play. The changes to TimerTest look like this:

    private var _timer:Timer;

    override public function tearDown():void {
        _timer.stop();
        _timer = null;
    }

    public function testTimer():void {
        _timer = new Timer(3000, 1);
        _timer.addEventListener(TimerEvent.TIMER, incrementCount);
        _timer.addEventListener(TimerEvent.TIMER_COMPLETE, addAsync(verifyCount, 3500));
        _timer.start();
    }

I've taken the original function, wrapped it in addAsync(), and added the maximum time to wait for the function to be called. Since my Timer is set to fire after 3000ms, I gave myself a little buffer. With this change and the assert testing for a count of 2, I'll get a red bar when the test runs. Additionally, I added a tearDown() method so that regardless of what happens in the test, the Timer will stop running. When doing asynchronous testing it is important to clean up like this, otherwise you can get objects hanging around in memory doing things you don't want. On that note, I'd also recommend that the event listeners added to the Timer use weak references. That way, when tearDown() nulls out the Timer, the object can be garbage collected. The updated code would look like this:

    _timer.addEventListener(TimerEvent.TIMER, incrementCount, false, 0, true);
    _timer.addEventListener(TimerEvent.TIMER_COMPLETE, addAsync(verifyCount, 3500), false, 0, true);

Now that we've added a timeout to the verifyCount() call, you'll also get a red bar if the function isn't called within the specified timeout. Drop the timeout to 1500ms and rerun the test. You should now get the following error:

Error: Asynchronous function did not fire after 1500 ms

at flexunit.framework::Assert$/fail()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\Assert.as:199]

at flexunit.framework::AsyncTestHelper/runNext()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\AsyncTestHelper.as:96]

at flexunit.framework::TestCase/flexunit.framework:TestCase::runTestOrAsync()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\TestCase.as:271]

at flexunit.framework::TestCase/runMiddle()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\TestCase.as:192]

at flexunit.framework::ProtectedMiddleTestCase/protect()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\ProtectedMiddleTestCase.as:54]

at flexunit.framework::TestResult/flexunit.framework:TestResult::doProtected()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\TestResult.as:237]

at flexunit.framework::TestResult/flexunit.framework:TestResult::doContinue()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\TestResult.as:109]

at flexunit.framework::TestResult/continueRun()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\TestResult.as:79]

at flexunit.framework::AsyncTestHelper/timerHandler()[C:\Documents and Settings\mchamber\My Documents\src\flashplatform\projects\flexunit\trunk\src\actionscript3\flexunit\framework\AsyncTestHelper.as:121]

at flash.utils::Timer/flash.utils:Timer::_timerDispatch()

at flash.utils::Timer/flash.utils:Timer::tick()

That's the quick introduction to addAsync(). Now on to some of its additional features that can be helpful. Say we wanted to test that a Timer correctly fires twice within a specified period of time. The incrementCount() function can easily be reused, but the verifyCount() method has a hard-coded assertEquals() in it. The optional third argument to addAsync() is called passThroughData, and it can be anything. In this case I'm going to pass the count I expect when the Timer finishes, which lets me reuse the same verify function. With these changes the functions now look like this:

    public function testTimer():void {
        _timer = new Timer(1000, 1);
        _timer.addEventListener(TimerEvent.TIMER, incrementCount, false, 0, true);
        _timer.addEventListener(TimerEvent.TIMER_COMPLETE, addAsync(verifyCount, 1500, 1), false, 0, true);
        _timer.start();
    }

    public function testTimer2():void {
        _timer = new Timer(500, 2);
        _timer.addEventListener(TimerEvent.TIMER, incrementCount, false, 0, true);
        _timer.addEventListener(TimerEvent.TIMER_COMPLETE, addAsync(verifyCount, 1500, 2), false, 0, true);
        _timer.start();
    }

    private function verifyCount(timerEvent:TimerEvent, expectedCount:int):void {
        assertEquals(expectedCount, _timerCount);
    }

In both cases I'm passing along the expected count to a generic verify method. The object that you pass can be anything, which makes this a very powerful way to write generic asynchronous event verifiers. But wait, there's more!

The last optional argument to addAsync(), the failFunction, is a way to verify that the event didn't happen. Instead of getting a red bar and the "Error: Asynchronous function did not fire after 1500 ms" message, you can verify that, given the event did not fire, everything is as it should be. For example, I could verify that after 1500ms a 1000ms Timer fired only one event and the complete event never fired. This last version of the test class does that. I also modified things a little, because if you include passThroughData it gets passed to both the regular function and the failFunction. This isn't a bad thing, as it provides a way to write a generic failFunction handler in the same way the generic verify handler was written. Note that the failFunction handler doesn't receive an event argument like the normal listener function does, since the event didn't fire.

    package com.example {
        import flexunit.framework.TestCase;
        import flash.utils.Timer;
        import flash.events.TimerEvent;

        public class TimerTest extends TestCase {
            private var _timerCount:int;
            private var _timer:Timer;

            override public function setUp():void {
                _timerCount = 0;
            }

            override public function tearDown():void {
                _timer.stop();
                _timer = null;
            }

            public function testTimer():void {
                runTimer(1000, 1, 1500, 1);
            }

            public function testTimer2():void {
                runTimer(500, 2, 1500, 2);
            }

            public function testNotDone():void {
                runTimer(1000, 2, 1500, -1, 1);
            }

            private function runTimer(delay:int, count:int, timeout:int, goodCount:int, badCount:int = -1):void {
                _timer = new Timer(delay, count);
                _timer.addEventListener(TimerEvent.TIMER, incrementCount, false, 0, true);
                _timer.addEventListener(TimerEvent.TIMER_COMPLETE, addAsync(verifyGoodCount, timeout, {goodCount: goodCount, badCount: badCount}, verifyBadCount), false, 0, true);
                _timer.start();
            }

            private function incrementCount(timerEvent:TimerEvent):void {
                _timerCount++;
            }

            private function verifyGoodCount(timerEvent:TimerEvent, extraData:Object):void {
                if (extraData.goodCount == -1)
                {
                    fail("Should have failed");
                }
                assertEquals(extraData.goodCount, _timerCount);
            }

            private function verifyBadCount(extraData:Object):void {
                if (extraData.badCount == -1)
                {
                    fail("Should not have failed");
                }
                assertEquals(extraData.badCount, _timerCount);
            }
        }
    }

Feel free to play with this final version to test how different asynchronous scenarios are handled in FlexUnit.

Some additional notes:

Make sure the listener function name doesn't start with test, otherwise FlexUnit will think that it is a test method and try to run it.

I've noticed some odd behavior with multiple addAsync()s set at the same time. In general you only want to have one outstanding addAsync() at a time.

If you specify passThroughData and a failFunction, the passThroughData will get passed to both the function and the failFunction.

Make sure that you clean up any objects that could still fire events in your tearDown() method, and either remove their event listeners or make them weak. There is more to this topic but I'll cover that in another post.
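As a rough sketch of the "remove event listeners" option, the variant below keeps a reference to the function returned by addAsync() so that tearDown() can remove it explicitly instead of relying on weak references. The class name TimerCleanupTest and the _completeHandler field are hypothetical names made up for this illustration; everything else reuses the calls already shown in this post, plus the standard removeEventListener() method. Treat it as one possible arrangement, not the definitive way to do it.

```actionscript
package com.example {
    import flexunit.framework.TestCase;
    import flash.utils.Timer;
    import flash.events.TimerEvent;

    // Hypothetical variant of TimerTest that removes its listeners explicitly.
    public class TimerCleanupTest extends TestCase {
        private var _timerCount:int;
        private var _timer:Timer;

        // Assumption for this sketch: hold on to the function returned by
        // addAsync() so the exact same reference can be removed later.
        private var _completeHandler:Function;

        override public function setUp():void {
            _timerCount = 0;
        }

        override public function tearDown():void {
            // Guard against a test that failed before the Timer was created.
            if (_timer != null) {
                _timer.stop();
                _timer.removeEventListener(TimerEvent.TIMER, incrementCount);
                if (_completeHandler != null) {
                    _timer.removeEventListener(TimerEvent.TIMER_COMPLETE, _completeHandler);
                }
            }
            _completeHandler = null;
            _timer = null;
        }

        public function testTimer():void {
            _timer = new Timer(1000, 1);
            // Wrap the verifier once and reuse the wrapper for add and remove.
            _completeHandler = addAsync(verifyCount, 1500, 1);
            _timer.addEventListener(TimerEvent.TIMER, incrementCount);
            _timer.addEventListener(TimerEvent.TIMER_COMPLETE, _completeHandler);
            _timer.start();
        }

        private function incrementCount(timerEvent:TimerEvent):void {
            _timerCount++;
        }

        private function verifyCount(timerEvent:TimerEvent, expectedCount:int):void {
            assertEquals(expectedCount, _timerCount);
        }
    }
}
```

The listeners here are strong references, so the explicit removal in tearDown() is what lets the Timer be collected. The null checks are only there so that a failure early in a test doesn't cause a second error during cleanup.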
